wanx-troopers

2.5D Tools

- GH:PozzettiAndrea/ComfyUI-Sharp quick Gaussian splat from image by Apple

- kijai/ComfyUI-MoGe, GH:microsoft/MoGe

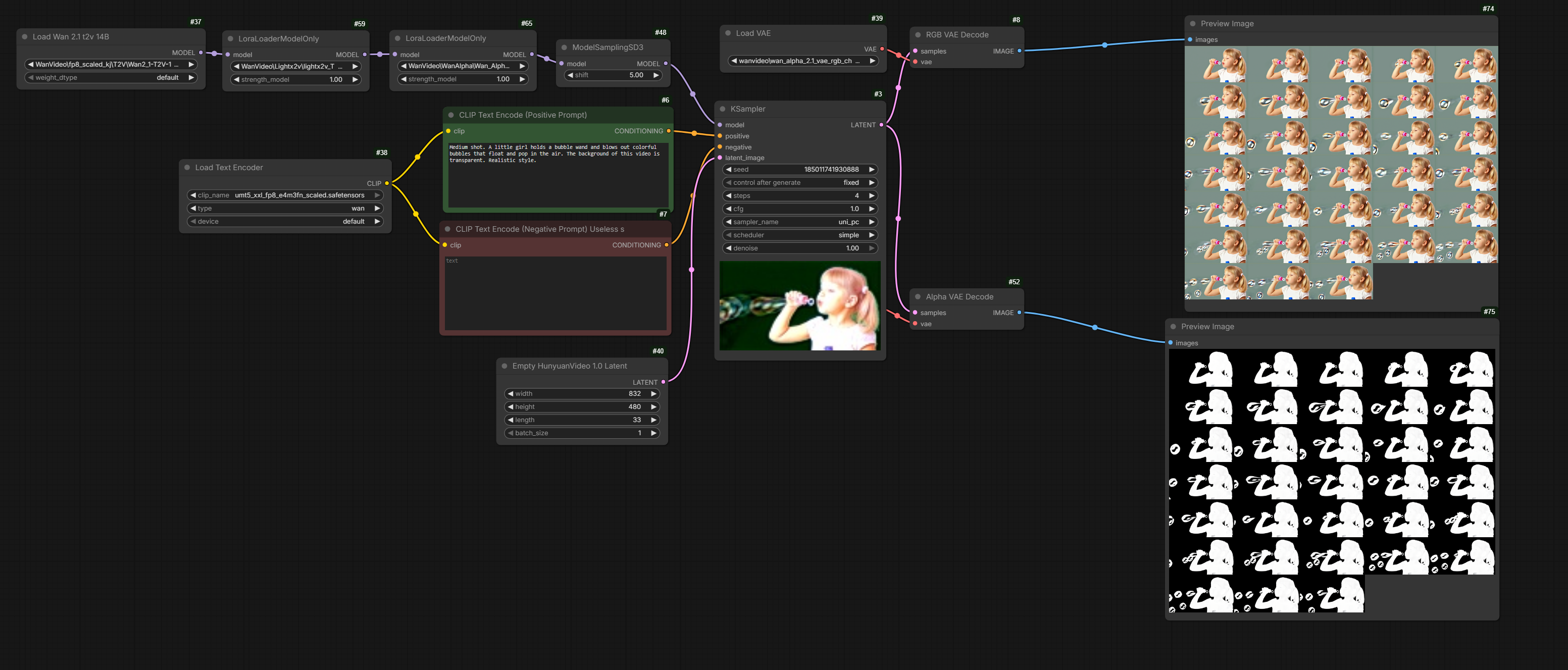

Wan Alpha

Kijai has adapted Wan Alpha “DoRA”: HF:Kijai/WanVideo_comfy:LoRAs/WanAlpha

Decoder needed for WanAlpha: decoder.bin in the following locations (files have different hashes but the same size)

- HF:htdong/Wan-Alpha version 1.0

- HF:htdong/Wan-Alpha-v2.0

I did not know they originally had 1 file but split it for comfy to 2; ah it’s just the fgr (foreground?) and pha (alpha?) split into two files

Test workflow:

Loops

So if anyone wants to use the loop for nodes, do not disable the ComfyUI cache like I did; I wasted 30 min figuring out that those nodes need the cache.

Drozbay:

Loops are possible with the current execution flow but are still somewhat fragile and they don’t allow for starting/stopping partial executions. You can’t stop half way through a set of loops, change something for the next iteration, and continue. Indexing with lists is also not super reliable right now. Overall it’s often times easier and more stable to just lean into the practically infinite canvas and just make gigantic workflows. They are large but to me they are simpler to understand than having everything hidden in loops or layers of subgraphs.

Resolution Master

GH:Azornes/Comfyui-Resolution-Master

NAG

- GH:scottmudge/ComfyUI-NAG ComfyUI NAG that supports Z-Image-Turbo as well as other new models as of Dec 2025

- GH:scottmudge/ComfyUI-NAG:lumina2_support fork of the above adding Z-Image-Turbo support, outstanding PR

Hmm.. what is NAGGuider from NAG?..

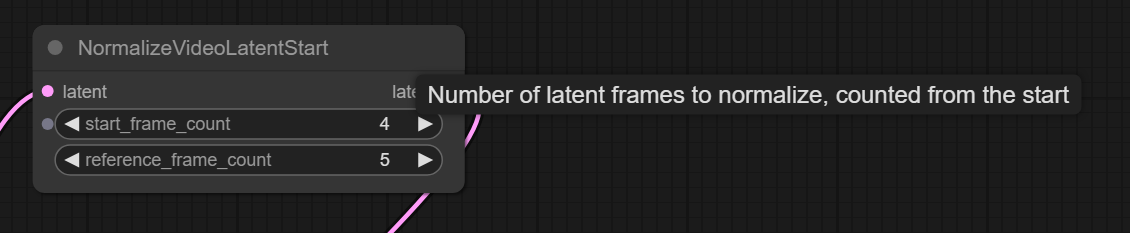

Combating Video Contrast Drift

NormalizeVideoLatentStart

Native ComfyUI now has a NormalizeVideoLatentStart node, which has been lifted out of the original Kandinsky-5 implementation.

The node apparently homogenizes contrast and color balance inside the video.

mean/std normalization applied when using I2V
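As a rough illustration of what mean/std normalization of the start frames could look like, the sketch below rescales the first few latent frames so that their mean and standard deviation match those of the frames that follow. It works on plain Python lists rather than tensors, and the function name and the `start_frames` / `reference_frames` parameters are assumptions made for illustration, not the node's actual code.

```python
from statistics import fmean, pstdev

def normalize_start(frames, start_frames=1, reference_frames=4, eps=1e-6):
    """Rescale the first `start_frames` frames to match the mean/std of the
    next `reference_frames` frames. Each frame is a flat list of floats.
    Hypothetical helper, not the ComfyUI implementation."""
    if len(frames) <= start_frames:
        return [list(f) for f in frames]  # no later frames to use as reference
    # Statistics of the reference frames that follow the start frames.
    ref = [v for f in frames[start_frames:start_frames + reference_frames] for v in f]
    ref_mean, ref_std = fmean(ref), pstdev(ref)
    # Statistics of the start frames themselves.
    start = [v for f in frames[:start_frames] for v in f]
    cur_mean, cur_std = fmean(start), pstdev(start)
    scale = ref_std / (cur_std + eps)
    out = []
    for i, f in enumerate(frames):
        if i < start_frames:
            out.append([(v - cur_mean) * scale + ref_mean for v in f])
        else:
            out.append(list(f))  # later frames are left untouched
    return out
```

Only the start frames are modified, which fits the I2V case where the conditioned first frame tends to differ in contrast from the generated remainder.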

WASWanExposureStabilizer

GH:WASasquatch/WAS_Extras contains, among other useful nodes, WASWanExposureStabilizer, which is intended for a similar purpose.

Pose Retargeter

GH:AIWarper/ComfyUI-WarperNodes

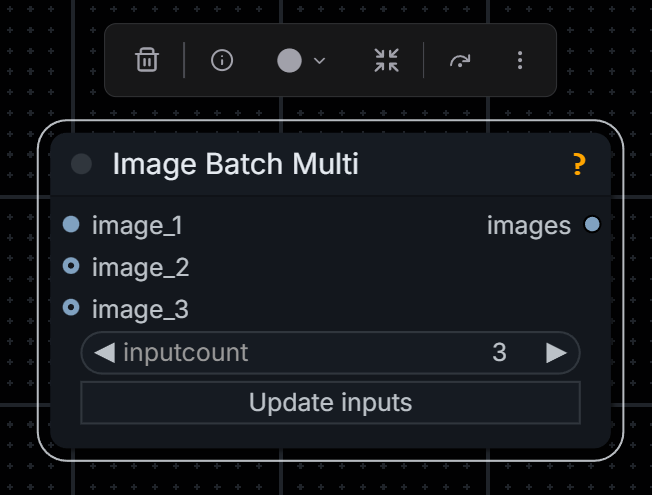

Video Blending From Fragments

kijai/ComfyUI-KJNodes contains Image Batch Extend With Overlap, which can be used to merge together the original video with its extension done using I2V or VACE mask extension techniques.

Example of it being used in a LongCat wf: extend-with-overlap.

WanVideoBlender from GH:banodoco/steerable-motion is an alternative.
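The idea of merging a video with its extension over a shared overlap can be sketched as a linear cross-fade. This is a minimal sketch and not the code of any of the nodes named here: the function name, the flat-list frame representation and the linear weighting are all assumptions.

```python
def blend_with_overlap(original, extension, overlap):
    """Join two frame sequences, cross-fading the last `overlap` frames of
    `original` into the first `overlap` frames of `extension`.
    Frames are flat lists of floats of equal length (hypothetical format)."""
    if overlap <= 0:
        return [list(f) for f in original] + [list(f) for f in extension]
    if overlap > min(len(original), len(extension)):
        raise ValueError("overlap exceeds sequence length")
    head = [list(f) for f in original[:-overlap]]
    tail = [list(f) for f in extension[overlap:]]
    mixed = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of the extension frame, 0 < w < 1
        a = original[len(original) - overlap + i]
        b = extension[i]
        mixed.append([(1 - w) * x + w * y for x, y in zip(a, b)])
    return head + mixed + tail
```

With two 4-frame clips and `overlap=2` the result has 6 frames: the overlapping frames appear once, blended, rather than twice.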

See also the next section on Trent Nodes

Trent Nodes

TrentHunter82/TrentNodes contains a Cross Dissolve with Overlap node as well as WanVace Keyframe Builder and other nodes for examining videos, taking last N frames, creating latent masks and VACE keyframing.

See also: Qwen Edit - VACE.

Hunyuan Video Foley

github.com/phazei/ComfyUI-HunyuanVideo-Foley

| HF Space | safetensors |
|---|---|
| ComfyUI-HunyuanVideo-Foley | hunyuanvideo_foley_xl |
| ComfyUI-HunyuanVideo-Foley | synchformer_state_dict_fp16 |
| ComfyUI-HunyuanVideo-Foley | vae_128d_48k_fp16 |

More Foley-s

- kijai/ComfyUI-MMAudio

- ACE Foley Generator

Inside of Comfy you could Use Stable Audio or ACE… but tbh both are not that good

Hiding In Plain Sight

- Resize Image v2 from kijai/ComfyUI-WanVideoWrapper: the new mode is total_pixels, which copies what WanVideo Image Resize To Closest from kijai/ComfyUI-WanVideoWrapper does, which is the original Wan logic

- Image Batch Extend With Overlap from kijai/ComfyUI-KJNodes to compose extensions created with VACE extend techniques

- Video Info from Kosinkadink/ComfyUI-VideoHelperSuite + Preview Any to debug dimension errors in ComfyUI etc
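A resize driven by a total pixel budget can be sketched roughly as follows: keep the input aspect ratio, aim for the requested area, and round both sides down to a model-friendly multiple. The function name and the default divisor of 16 are assumptions for illustration. The actual nodes may round differently.

```python
import math

def size_for_total_pixels(width, height, total_pixels, divisor=16):
    """Pick an output (width, height) of roughly `total_pixels` area that
    keeps the input aspect ratio, with both sides rounded down to a
    multiple of `divisor`. Hypothetical helper, not the node's code."""
    aspect = height / width
    new_h = int(math.sqrt(total_pixels * aspect)) // divisor * divisor
    new_w = int(math.sqrt(total_pixels / aspect)) // divisor * divisor
    # Never return a zero-sized side for tiny budgets.
    return max(new_w, divisor), max(new_h, divisor)

# A 1920x1080 input with an 832*480 pixel budget.
print(size_for_total_pixels(1920, 1080, 832 * 480))  # (832, 464)
```

Because both sides are rounded down, the resulting area never exceeds the budget, which is usually the safer direction for memory use.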

Ckinpdx

Ckinpdx, a passionate AI artist, has shared the GH:ckinpdx/ComfyUI-WanKeyframeBuilder repository.

Ckinpdx Wan Keyframe Builder (Continuation)

The GH:ckinpdx/ComfyUI-WanKeyframeBuilder repository provides the Wan Keyframe Builder (Continuation) node.

This node was originally intended to prepare images and masks for VACE workflows.

When SVI 2.0 was released the node was updated to facilitate workflows combining VACE keyframing, extensions and SVI references.

The node has two distinct modes of operation: one where the images output is used and one where the svi_reference_only output is used.

The modes are toggled by a boolean switch on the node.

Sample wf.

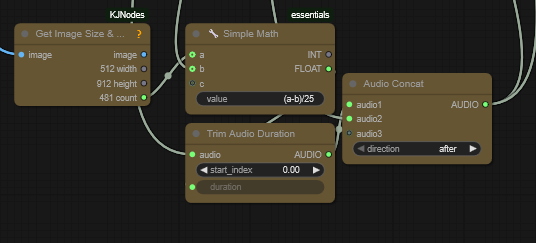

Ckinpdx Load Audio And Split

Use this node to split audio between generation runs which produce various parts of the video with HuMo.

Use Trim Audio Duration as shown to remove the duplicated part of the audio before re-assembling the video.
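The bookkeeping behind this split-then-trim approach can be sketched as below, assuming each generation run after the first re-uses a short stretch of audio from the previous run. The function names, and the assumption that the overlap is a fixed number of seconds, are hypothetical and not taken from the node.

```python
def split_audio_windows(total_seconds, window_seconds, overlap_seconds):
    """Return (start, end) times for consecutive windows, where each window
    after the first starts `overlap_seconds` before the previous one ended."""
    if not 0 <= overlap_seconds < window_seconds:
        raise ValueError("overlap must be smaller than the window")
    windows, start = [], 0.0
    while start < total_seconds:
        end = min(start + window_seconds, total_seconds)
        windows.append((start, end))
        if end >= total_seconds:
            break
        start = end - overlap_seconds
    return windows

def trim_duplicates(windows, overlap_seconds):
    """Drop the repeated lead-in from every window except the first, so the
    pieces can be concatenated without doubled audio."""
    return [(s if i == 0 else s + overlap_seconds, e)
            for i, (s, e) in enumerate(windows)]
```

For a 10 second track with 4 second windows and 1 second of overlap, the windows are (0, 4), (3, 7), (6, 10), and after trimming they tile the track as (0, 4), (4, 7), (7, 10).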

Other Repositories by Ckinpdx

- GH:ckinpdx/ComfyUI-WanSoundTrajectory build paths to feed into Wan-Move based on music beats

- GH:ckinpdx/ComfyUI-SCAIL-AudioReactive generate audio-reactive SCAIL pose sequences for character animation without requiring input video tracking

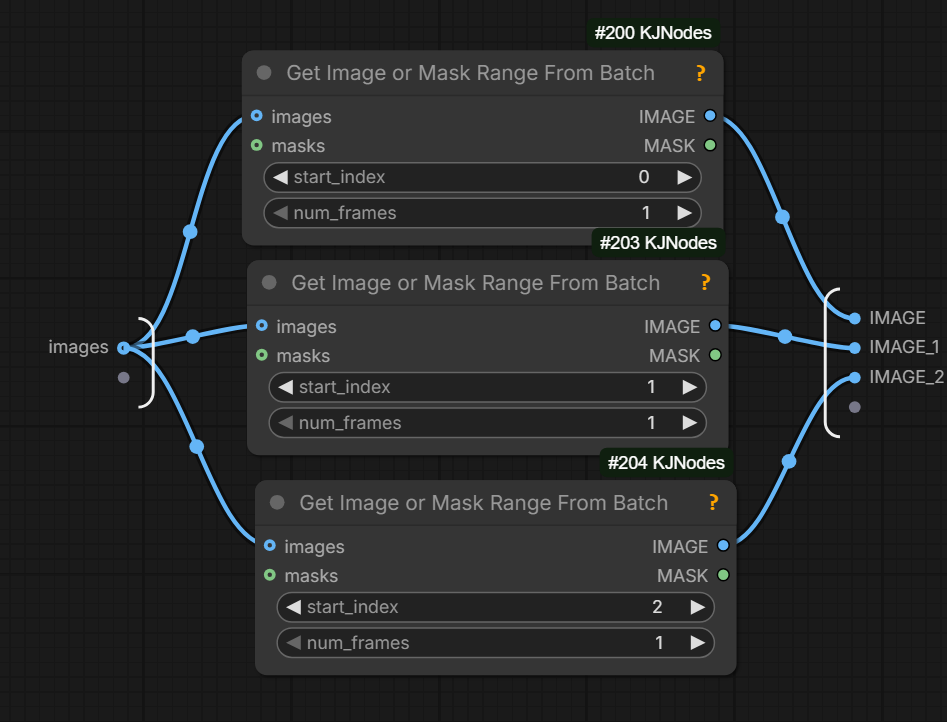

Assemble/Disassemble

Assemble separate images into a sequence

Disassemble sequence into separate images

Execution Timer

from GH:PGCRT/CRT-Nodes:

Unilumos

UniLumos is an AI model for relighting a video. Workflows:

Misc

- IndexTTS2: “I had Chatterbox, IndexTTS, another IndexTTS node, Chatterboxt5, VibeVoice … IndexTTS seems a lot better”

- VibeVoice TTS

- GH:filliptm/ComfyUI_Fill-ChatterBox Nodes for ResembleAI’s Chatterbox models: voice cloning, multilingual synthesis, voice conversion.

- GH:diodiogod/TTS-Audio-Suite: tts-text, prepare wf, wf

“done a lot of … vibevoice … problems … tts 2 now … much happier”

- GH:BlenderNeko/ComfyUI_Noise tools for working with noise including “unsampling”

- GH:MoonHugo/ComfyUI-FFmpeg nodes for concatenating, converting between png and mp4, splitting/adding audio, vert/horiz stitching of videos

- SuperPrompt node from kijai/ComfyUI-KJNodes.

- Merge Images node from VideoHelperSuite (so called VHS)

- GH:stavsap/comfyui-ollama ComfyUI nodes to connect to local-running KoboldCpp executing Qwen3-VL on the CPU in order to translate images to descriptions.

- Urabewe/OllamaVision a SwarmUI extension to generate prompts.

- GH:chflame163/ComfyUI_LayerStyle can add film grain to images.

- GH:CoreyCorza/ComfyUI-CRZnodes CRZ Crop/Draw Mask

- GH:elgalardi/comfyui-clip-prompt-splitter quickly coded node for splitting the prompt by line into multiple conditioning noodles - useful for multistage wf-s
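Splitting a prompt by line, as the last item in the list above does, can be sketched in a few lines. This only shows the text-splitting step. The function name is hypothetical, and the CLIP encoding of each resulting line into its own conditioning is not shown.

```python
def split_prompt_by_line(prompt):
    """Split a multi-line prompt into one stripped prompt per non-empty line.
    Hypothetical helper illustrating the idea, not the node's code."""
    return [line.strip() for line in prompt.splitlines() if line.strip()]

stages = split_prompt_by_line("a cat sitting\n\n  a cat jumping  \n")
print(stages)  # ['a cat sitting', 'a cat jumping']
```

In a multistage wf each entry would then feed a separate sampler stage.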

Frame Interpolation

Moved here.

Pose Detection

vitpose can do animals as well as humans.

dwpose